I'm currently running 16 web sites on Windows Azure. I have a few Virtual Machines, but I prefer to run things using "platform as a service" where I don't have to sweat the underlying Virtual Machine. That means, while I know I can run a Virtual Machine and put "cron" jobs on it, I'm less likely to because I don't want to mess with VMs or Worker Roles.

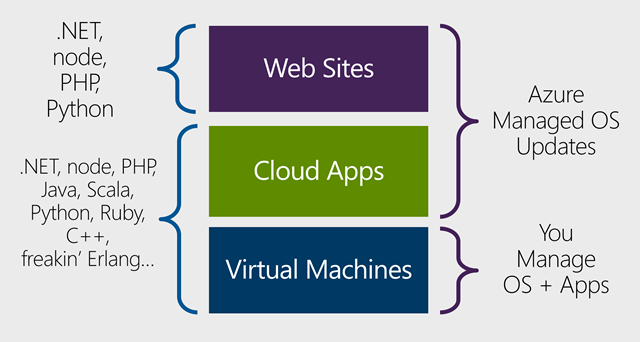

There are a few ways to run stuff on Azure. First, there's IAAS (Infrastructure as a Service), which is VMs. Then there are Cloud Applications (Cloud Services), where you can run anything in an Azure-managed VM. It's still a VM, but you have a lot of choice and can run Worker Roles and background stuff. However, there's a lot of ceremony if you just want to run your small "job" either on a regular basis or via a trigger.

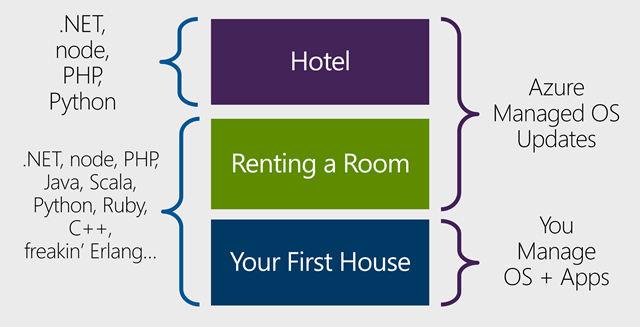

Looking at this differently, platform as a service is like having your hotel room fixed up daily, while running VMs is more like managing a house yourself.

As someone who likes to torch a hotel room as much as the next person, this is why I like Azure Web Sites (PAAS). You just deploy, and it's done. The VM is invisible and the site is always up.

However, until now there hasn't been a good solution under Web Sites for doing regular jobs and batch work in the background. Azure Web Sites now supports a feature called "Azure WebJobs" to solve this problem simply.

SCALING A COMMAND LINE APPLICATION WITH AZURE WEBJOBS

When I want to do something simple - like resize some images - I'll either write a script or a small .NET application. Things do get complex though when you want to take something simple and do it n times. Scaling a command line app to the cloud often involves a lot of yak shaving.

Let's say I want to take this function that works fine at the command line and run it in the cloud at scale.

    public static void SquishNewlyUploadedPNGs(Stream input, Stream output)
    {
        var quantizer = new WuQuantizer();
        using (var bitmap = new Bitmap(input))
        {
            using (var quantized = quantizer.QuantizeImage(bitmap))
            {
                quantized.Save(output, ImageFormat.Png);
            }
        }
    }

WebJobs aim to make developing, running, and scaling this easier. They are built into Azure Web Sites and run in the same VM as your web sites.

Here are some typical scenarios that would be great for the Windows Azure WebJobs SDK:

- Image processing or other CPU-intensive work.

- Queue processing.

- RSS aggregation.

- File maintenance, such as aggregating or cleaning up log files.

- Other long-running tasks that you want to run in a background thread, such as sending emails.

WebJobs are invoked in two different ways: either they are triggered or they run continuously. Triggered jobs run on a schedule or when some event happens, while continuous jobs basically run a while loop.
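To make the "while loop" idea concrete, here is a minimal sketch of what a continuous job can look like as a plain console app, with no SDK involved. The `RunLoop` helper, the in-memory queue, and the idle delay are all hypothetical stand-ins for illustration. A real continuous job would loop forever and pull work from somewhere durable. When you use the SDK, the `host.RunAndBlock()` call in the sample later in this post plays this role for you.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Sketch of a continuous job: poll for work, sleep briefly when idle.
// RunLoop and its parameters are hypothetical, not part of any Azure API.
public static class ContinuousJobSketch
{
    // Calls tryDoWork up to maxIterations times and returns how many
    // calls reported that they actually did some work.
    public static int RunLoop(Func<bool> tryDoWork, int maxIterations, TimeSpan idleDelay)
    {
        int processed = 0;
        for (int i = 0; i < maxIterations; i++)
        {
            if (tryDoWork())
            {
                processed++;
            }
            else
            {
                // Nothing to do right now, so back off before polling again.
                Thread.Sleep(idleDelay);
            }
        }
        return processed;
    }

    public static void Main()
    {
        // An in-memory queue stands in for real pending work.
        var pending = new Queue<string>(new[] { "a.png", "b.png" });

        int done = RunLoop(() =>
        {
            if (pending.Count == 0) return false;
            Console.WriteLine("Processing " + pending.Dequeue());
            return true;
        }, maxIterations: 5, idleDelay: TimeSpan.FromMilliseconds(10));

        Console.WriteLine("Processed " + done + " item(s)");
    }
}
```

The loop is bounded here only so the example terminates. In a real continuous job you would replace the `for` with `while (true)`.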

WebJobs are deployed by copying them to the right place in the file-system (or using a designated API which will do the same). The following file types are accepted as runnable scripts that can be used as a job:

- .exe - .NET assemblies compiled with the WebJobs SDK

- .cmd, .bat, .exe (using windows cmd)

- .sh (using bash)

- .php (using php)

- .py (using python)

- .js (using node)

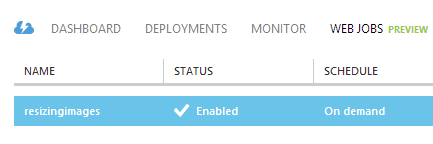

After you deploy your WebJobs from the portal, you can start and stop jobs, delete them, upload jobs as ZIP files, etc. You've got full control.

A good thing to point out, though, is that Azure WebJobs are more than just scheduled scripts. You can also create WebJobs as .NET projects written in C# or another .NET language.

MAKING A WEBJOB OUT OF A COMMAND LINE APP WITH THE WINDOWS AZURE WEBJOBS SDK

WebJobs can effectively take some command line C# application with a function and turn it into a scalable WebJob. I spoke about this over the last few years in presentations when it was codenamed "SimpleBatch." This lets you write a simple console app to, say, resize an image, then move it up to the cloud and resize millions. Jobs can be triggered by the appearance of new items on an Azure Queue, or by new binary Blobs showing up in Azure Storage.

NOTE: You don't have to use the WebJobs SDK with the WebJobs feature of Windows Azure Web Sites. As noted earlier, the WebJobs feature enables you to upload and run any executable or script, whether or not it uses the WebJobs SDK framework.

I wanted to make a WebJob that would squish PNGs (by quantizing their colors) as I upload them to Azure Storage. When new PNGs show up, the job should automatically run on these new PNGs. This is easy as a command line app using the nQuant open source library, as in the code above.

Now I'll add the WebJobs SDK NuGet package (it's prerelease) and Microsoft.WindowsAzure.Jobs namespace, then add [BlobInput] and [BlobOutput] attributes, then start the JobHost() from Main. That's it.

    using Microsoft.WindowsAzure.Jobs;
    using nQuant;
    using System.Drawing;
    using System.Drawing.Imaging;
    using System.IO;

    namespace ConsoleApplication1
    {
        class Program
        {
            static void Main(string[] args)
            {
                JobHost host = new JobHost();
                host.RunAndBlock();
            }

            public static void SquishNewlyUploadedPNGs(
                [BlobInput("input/{name}")] Stream input,
                [BlobOutput("output/{name}")] Stream output)
            {
                var quantizer = new WuQuantizer();
                using (var bitmap = new Bitmap(input))
                {
                    using (var quantized = quantizer.QuantizeImage(bitmap))
                    {
                        quantized.Save(output, ImageFormat.Png);
                    }
                }
            }
        }
    }

CONTEXT: Let's just step back and process this for a second. All I had to do was spin up the JobHost and set up a few attributes. Minimal ceremony for maximum results. My console app is now processing information from Azure blob storage without ever referencing the Azure Blob Storage API!

The function is automatically called when a new blob (in my case, a new PNG) shows up in the input container in storage and the Stream parameters are automatically "bound" (like Model Binding) for me by the WebJobs SDK.

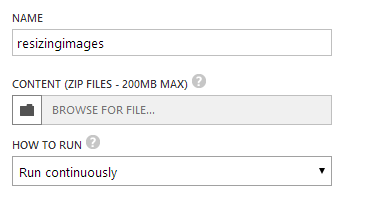
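If the `{name}` placeholder in those attributes feels magical, the idea is roughly this: whatever matched `{name}` in the input path gets substituted into the output path. The sketch below imitates that with a regular expression. It is only an illustration of the concept, not the WebJobs SDK's actual implementation, and `ResolveOutputPath` is a hypothetical helper name.

```csharp
using System;
using System.Text;
using System.Text.RegularExpressions;

// Illustration only: mimics how a "{name}" placeholder captured from an
// input blob path could be reused in an output blob path.
public static class BlobPathBindingSketch
{
    // Returns the resolved output path, or null if blobPath does not
    // match inputPattern.
    public static string ResolveOutputPath(string inputPattern, string outputPattern, string blobPath)
    {
        // Build a regex from the pattern: literal text is escaped and each
        // {placeholder} becomes a named capture group.
        var regex = new StringBuilder("^");
        int last = 0;
        foreach (Match m in Regex.Matches(inputPattern, @"\{(\w+)\}"))
        {
            regex.Append(Regex.Escape(inputPattern.Substring(last, m.Index - last)));
            regex.Append("(?<" + m.Groups[1].Value + ">.+)");
            last = m.Index + m.Length;
        }
        regex.Append(Regex.Escape(inputPattern.Substring(last)));
        regex.Append("$");

        Match match = Regex.Match(blobPath, regex.ToString());
        if (!match.Success) return null;

        // Substitute each {placeholder} in the output pattern with the
        // value captured from the input path.
        return Regex.Replace(outputPattern, @"\{(\w+)\}",
            m => match.Groups[m.Groups[1].Value].Value);
    }

    public static void Main()
    {
        Console.WriteLine(ResolveOutputPath("input/{name}", "output/{name}", "input/scottha.png"));
    }
}
```

With the patterns from my function, a blob at `input/scottha.png` resolves to `output/scottha.png`, and a path outside the input container does not match at all.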

To deploy, I zip up my app and upload it from the WebJobs section of my existing Azure Website in the Portal.

Here it is in the Portal.

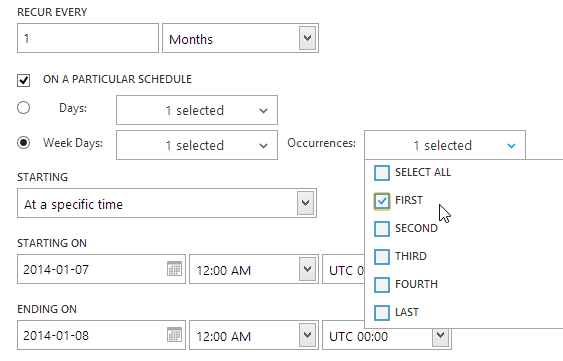

I'm setting mine to continuous, but it can also run on a detailed schedule:

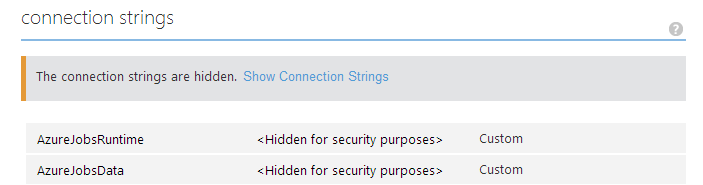

I need my WebJob to be told about what Azure Storage account it's going to use, so from my Azure Web Site under the Connection Strings section I set up two strings, one for the AzureJobsRuntime (for logging) and one for AzureJobsData (what I'm accessing).

For what I'm doing they are the same. The connection strings look like this:

DefaultEndpointsProtocol=https;AccountName=hanselstorage;AccountKey=3exLzmagickey
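The connection string is just semicolon-separated `key=value` pairs. As a rough sketch of how those pieces break apart, here is a tiny parser using only the base class library. The `Parse` helper is hypothetical and the account key is a made-up placeholder. In real code you would more likely let the storage client library do this for you (for example `CloudStorageAccount.Parse`).

```csharp
using System;
using System.Collections.Generic;

// Sketch: split a storage connection string into its settings.
// Parse is a hypothetical helper, not part of the Azure SDK.
public static class ConnectionStringSketch
{
    public static Dictionary<string, string> Parse(string connectionString)
    {
        var settings = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        foreach (string part in connectionString.Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries))
        {
            // Split on the FIRST '=' only: account keys are base64 and
            // can themselves end in '=' padding.
            int eq = part.IndexOf('=');
            if (eq <= 0) continue; // skip malformed segments
            settings[part.Substring(0, eq).Trim()] = part.Substring(eq + 1).Trim();
        }
        return settings;
    }

    public static void Main()
    {
        // Placeholder values only; never hard-code a real key.
        var settings = Parse("DefaultEndpointsProtocol=https;AccountName=hanselstorage;AccountKey=notARealKey==");
        Console.WriteLine(settings["AccountName"]);
    }
}
```

Splitting on the first `=` rather than every `=` is the one detail worth noticing, since a naive split would mangle the key.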

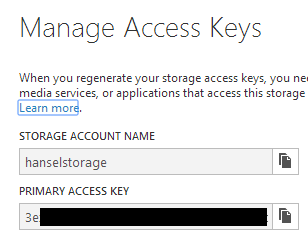

The key here came from Manage Access Keys in my storage account, here:

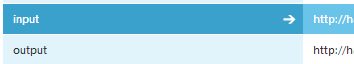

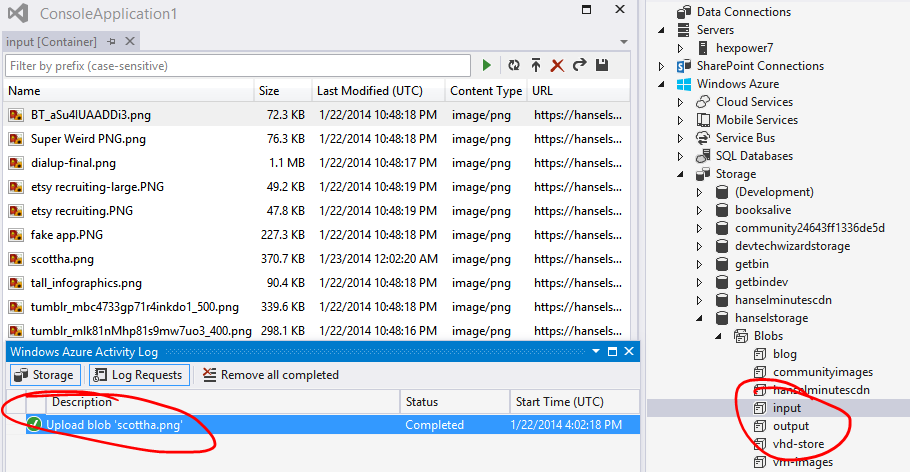

In my "hanselstorage" storage account I made two containers, input and output. You can name yours whatever you like. You can also process from Queues, etc.

Now, going back to the code, look at the parameters to the Attributes I'm using:

    public static void SquishNewlyUploadedPNGs(
        [BlobInput("input/{name}")] Stream input,
        [BlobOutput("output/{name}")] Stream output)

There are the strings "input" and "output" pointing to specific containers in my storage account. Again, the actual storage account (hanselstorage) is part of the connection string. That lets you reuse WebJobs in multiple sites, just by changing the connection strings.

There is a link to the Azure WebJobs Dashboard to the right of your job. The URL format is https://YOURSITE.scm.azurewebsites.net/azurejobs. You'll need to enter the same credentials you use for Azure deployment.

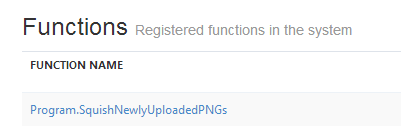

Once you've uploaded your job, you'll see the registered function(s) here:

I've installed the Azure SDK and can access my storage live within Visual Studio. You can also try 3rd party apps like Cloudberry Explorer. Here I've uploaded a file called scottha.png into the input container.

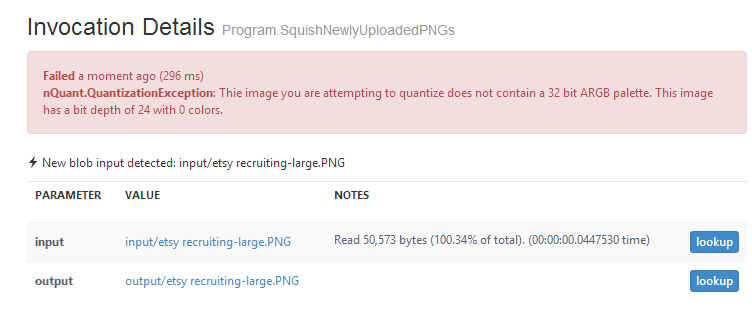

After a few minutes the SDK will pick up the new blob (Queues are faster, but blobs are checked every 10 minutes), and the job will run and either succeed or fail. If your app throws an exception you'll actually see it in the Invocation Details part of the site.

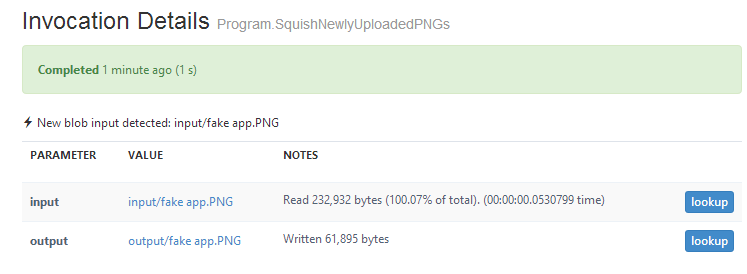

Here's a successful one. You can see it worked (it squished) because of the number of input bytes and the number of output bytes.

You can see the full output of what happens in a WebJob within this Dashboard, or check the log files directly via FTP. For me, I can explore my output container in Azure Storage and download or use the now-squished images. Again, this can be used for any large job whether it be processing images, OCR, log file analysis, SQL server cleanup, whatever you can think of.

Azure WebJobs is in preview, so there will be bugs, changing documentation and updates to the SDK but the general idea is there and it's solid. I think you'll dig it.

RELATED LINKS

- Azure Friday - 10 minute tutorials that help you learn Azure. Also on iTunes!

- WebJobs Getting Started Tutorial

- WebJobs Samples on CodePlex

- Links on how to use Azure WebJobs

- Azure WebJobs on Windows Azure
